Last Updated on 09/13/2020 by dboth

Author’s Note: Much of this article first appeared at Enable Sysadmin.

Many of you have been using solid state disk (SSD) devices to replace the venerable hard drive with physical spinning disks (HDDs) for a long time now. I have been too, actually, but only because the System76 Oryx Pro laptop I purchased a couple years ago came with SSDs as the primary storage option. Whenever I boot my laptop – which is not frequently because I usually let it run 24×7 for various reasons – it surprises me how quickly I get to a login prompt. All of my other physical hosts boot more slowly from their spinning disk hard drives.

Don’t get me wrong. I like my computers with fast processors, with lots of CPUs, and large amounts of RAM. But I have this problem. People gift me their old computers and I dismantle them for parts including hard drives. Few people are currently discarding systems with SSDs and I expect that will not change for a while.

I have managed to use those older systems until the motherboard or something else that is irreplaceable on it dies. At that point I take the remaining unusable components of the defunct system to the local electronics recycling center, but I keep any usable components including hard drives. So I end up with plenty of old hard drives, some of which I use to keep some of those older systems going for a while longer. The rest just sit in a container waiting to be used.

I hate to discard perfectly usable computer parts. I just know that someday I will be able to use them and I like to try to keep computers and parts out of the recycling process so long as they are useful. And I have found places to use most of those older bits including most of those old hard drives.

Why SSD?

The primary function of both HDD and SSD devices is to store data in a non-volatile medium so that it will not be lost when the power is turned off. Both drive technologies store the operating system, application programs, and your data so that they can be moved into main memory (RAM) for use. The functional advantages of SSD over HDD are twofold and both are due to the solid state nature of the SSD.

First, SSDs have no moving parts to wear out or break. I have had many HDDs fail over the years; I use them hard and the mechanical components wear out over time.

The second advantage to SSDs is that they are fast. Due to their solid state nature, SSDs can access any location in their memory at the same speed. They do not need to wait for mechanical arms to seek to the track on which the data is stored and then wait for the sector containing the data to rotate under the read/write heads. These seek and rotational latencies are the mechanical factors that slow access to the data. SSDs have no such mechanical latencies to slow them down. SSDs are typically 10x faster when reading data and 20x faster when writing.
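
To put a rough number on rotational latency: on average the platter has to turn half a revolution before the wanted sector reaches the heads. The sketch below works that out for a given spindle speed. The function name and the 7200 RPM example are illustrative assumptions, not measurements from any particular drive, and seek time comes on top of this figure.

```shell
# Average rotational latency in milliseconds for a spindle speed in RPM.
# One revolution takes 60000/RPM ms; on average the sector is half a turn away.
avg_rotational_latency_ms() {
    awk -v rpm="$1" 'BEGIN { printf "%.2f\n", (60000 / rpm) / 2 }'
}

avg_rotational_latency_ms 7200    # prints 4.17
```

An SSD pays no such penalty on any access, which is where much of the perceived speed comes from.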

Other non-performance-related advantages for SSDs over HDDs are that SSDs use less energy, they are smaller and weigh less than HDDs, and they are less likely to be damaged if dropped.

In many respects SSDs are like USB thumb drives which are solid state devices and use essentially the same flash memory storage technology.

The primary drawbacks to SSD devices are that they are more expensive for a given storage size than HDDs and that HDDs have a greater maximum capacity than SSDs, currently about 14TB for HDDs and 4TB for SSDs. These gaps are narrowing. Another issue with SSDs is that their memory cells can “leak” and degrade over time if they are not kept powered. The degradation can result in data loss after about a year of being stored without power, making them unsuitable for off-line archival storage.

There are a number of interesting and informative articles about SSDs at the Crucial and Intel web sites. To be clear, I like these pages for their excellent explanations and descriptions; I have no relationship of any kind with Crucial or Intel other than to purchase at retail some of their products for my personal use.

SSD types

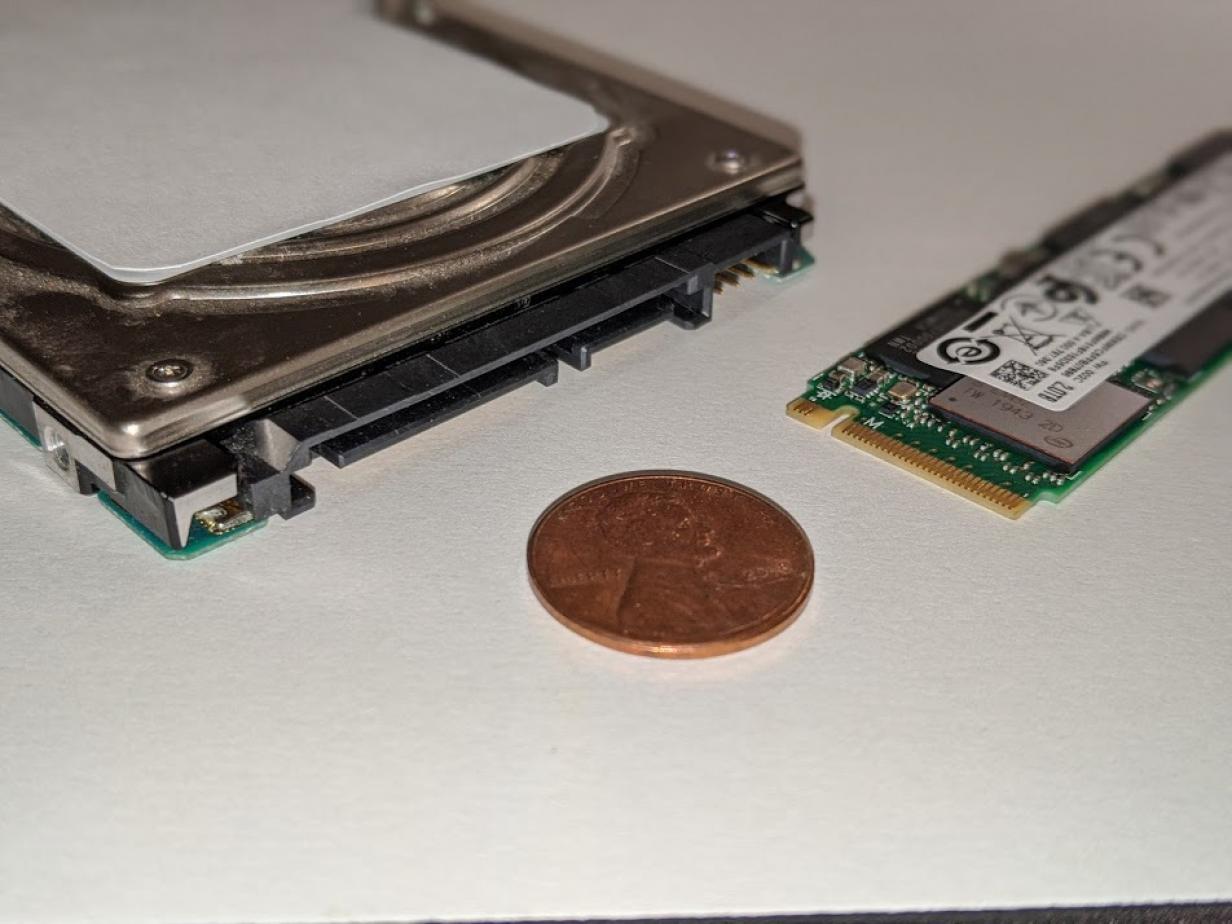

There are two common SSD form factors and interfaces. One is a direct replacement for hard disk drives; it uses standard SATA power and data connectors and can be mounted in a 2.5” drive mounting bay. SATA SSDs are limited to the speed of the SATA bus, which is a maximum of 600MB/s.
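
The SATA ceiling follows from simple arithmetic. SATA III signals at 6 gigabits per second, and its 8b/10b encoding puts 10 bits on the wire for every 8-bit byte of data, so the usable rate works out to 600 megabytes per second. A small sketch of that calculation, with a function name chosen only for illustration:

```shell
# Usable SATA throughput in MB/s, given the line rate in Gb/s.
# 8b/10b encoding sends 10 bits on the wire for each data byte.
sata_max_mb_per_s() {
    awk -v gbps="$1" 'BEGIN { printf "%d\n", gbps * 1000 / 10 }'
}

sata_max_mb_per_s 6    # SATA III, prints 600
```

Real drives land somewhat below this figure because of protocol overhead.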

The other form factor uses an M.2 PCIe connector that is typically mounted directly on the motherboard. The ASUS TUF X299 motherboard in my primary workstation has two of these connectors. The physical form factor for M.2 SSDs is 22mm wide with varying lengths up to about 80mm. M.2 devices can achieve read speeds of up to 40Gb/s due to the direct connection to the PCIe bus.

Figure 1: Two SSD devices, SATA on the left and M.2 on the right, with a penny for size comparison. Photo by Jason Baker. CC BY-SA.

There are also multiple interface protocols used by SSD devices. NVMe (Non-Volatile Memory Express), which runs over PCIe, is the fastest.

My SSD

Several weeks ago I purchased an Intel 512GB M.2 NVMe SSD for a customer project that never fully matured. I ran across that SSD while looking through my few remaining hard drives and realized that all but one of them were old, had been used multiple times, and were probably close to a catastrophic failure. And then there was the SSD, which was brand new.

Did I mention that my laptop boots really, really fast? My primary workstation did not.

I have also wanted to do a complete Fedora reinstallation for a few months because I have been doing release upgrades since about Fedora 21. Sometimes doing a fresh install to get rid of some of the cruft is a good idea. All things considered, it seemed like a good idea to do the reinstall of Fedora on the SSD.

My plan was to install the SSD in one of the two M.2 slots on my ASUS TUF X299 motherboard and install Fedora on it, placing all of the operating system and application program filesystems on it: /boot, /boot/efi, / (root), /var, /usr, and /tmp. I chose not to place the swap partition on the SSD because I have enough RAM that the swap partition is almost never used. Also, /home would remain on its own partition on an HDD.

I powered off, installed the SSD in one of the M.2 slots on the motherboard, booted to a Fedora Xfce live USB drive, and did a complete installation. I chose to manually create the disk partitions so that I could delete the old operating system partitions: /boot, /boot/efi, / (root), /var, /usr, and /tmp. This also allowed me to create mount points – and thus entries in /etc/fstab – for partitions and logical volumes I wanted to keep untouched and use in the new installation. This capability is one reason I create /home and certain other partitions or logical volumes as separate filesystems.

The installation went very smoothly. After this I ran a Bash program I wrote to install and configure various tools and application software. That also went well – and fast – very fast.

The results

I do wish I had timed the startup before and after but I didn’t think of it before I started this project. I only got the idea to write this article after I had already passed the point of no return with respect to timing the original bootup. But my workstation starts up significantly faster than before based on my purely subjective experience. Programs such as LibreOffice, Thunderbird, Firefox, and others load much faster because the /usr volume is now on the SSD.
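
For anyone who wants numbers rather than a subjective impression, systemd records how long each boot phase takes and `systemd-analyze time` reports it. The following is a minimal sketch, assuming the usual one-line "Startup finished in ... = total" summary; the `boot_total` helper and the sample line are hypothetical and are not measurements from the workstation described here.

```shell
# Print the total startup time from `systemd-analyze time` output on stdin.
boot_total() {
    sed -n 's/^Startup finished in .* = \(.*[^ ]\) *$/\1/p'
}

# Hypothetical sample line; on a real host use:
#   systemd-analyze time | boot_total
echo 'Startup finished in 2.1s (kernel) + 3.4s (initrd) + 12.5s (userspace) = 18.0s' | boot_total
```

Recording this value before and after a hardware change gives a simple comparison, and `systemd-analyze blame` lists the individual units by the time they took to start.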

I also find that searching for and installing RPM packages is faster than it was before the upgrade. I think that at least part of this improvement is due to the speed at which the local RPM database and DNF repository files can be read.

I am quite happy with the significant speed increase.

Other considerations

There are plenty of discussions on the web about formatting SSD devices. Most of them revolve around using the so-called “quick format” rather than a long (a.k.a. full) format. The quick format simply writes the filesystem metadata while the long format writes empty sector data, including things like sector numbers, to each sector of the partition. That is why it takes longer and, according to vendors, that also reduces the life expectancy of the SSD.

Creating a Linux filesystem such as EXT4 is a process similar to a quick format because it only creates and writes the filesystem metadata. There is no need to be concerned about selecting long vs short format because there is no such thing as a long format in Linux. However, the Linux shred command, which is used to erase and obscure data, would very likely cause the same problems attributed to the long format but without actually overwriting the existing data on the SSD; the reason for this is beyond the scope of this article.

It is necessary to perform some regular maintenance on SSDs. My interpretation of the problem is that before SSD memory cells can be written, they must first be set to an “available” state. This takes time and so writing a new or altered data sector is simply performed by writing the entire data for a sector to a new sector on the device and marking the old sector as inactive. However, “deleted” sectors cannot be used because the old data still exists and the memory cells in that sector must be reset to an available state before new data can be written to them. There is a tool called “trim” that performs this task.

Linux provides trim support with the fstrim command and many SSD devices contain their own hardware implementation of trim for operating systems that do not have it. Trim is enabled for Linux filesystems using the mount command or entries in the /etc/fstab configuration file. I added the “discard” option to the SSD filesystem entries in my fstab file so that trim would be handled automatically.

    /dev/mapper/vg01-root                      /      ext4  discard,defaults  1 1
    UUID=d33ac198-94fe-47ac-be63-3eb179ca48a3  /boot  ext4  discard,defaults  1 2
    /dev/mapper/vg_david3-home                 /home  ext4  defaults          1 2
    /dev/mapper/vg01-tmp                       /tmp   ext4  discard,defaults  1 2
    /dev/mapper/vg01-usr                       /usr   ext4  discard,defaults  1 2
    /dev/mapper/vg01-var                       /var   ext4  discard,defaults  1 2
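
A quick way to confirm which entries actually carry the option is to scan the fourth field of each fstab line. This is only a sketch: the `discard_mounts` helper is a hypothetical name, and the sample lines are a subset of the entries shown above.

```shell
# List mount points in fstab-format input (stdin) whose options include "discard".
discard_mounts() {
    awk '$1 !~ /^#/ && NF >= 4 {
        n = split($4, opts, ",")
        for (i = 1; i <= n; i++)
            if (opts[i] == "discard") { print $2; break }
    }'
}

# On a real host: discard_mounts < /etc/fstab
printf '%s\n' \
    '/dev/mapper/vg01-root / ext4 discard,defaults 1 1' \
    '/dev/mapper/vg_david3-home /home ext4 defaults 1 2' \
    '/dev/mapper/vg01-usr /usr ext4 discard,defaults 1 2' | discard_mounts
```

With the sample input this prints / and /usr, leaving out /home, which stays on the HDD. For filesystems that are already mounted, `findmnt -O discard` shows the ones currently using the option.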

Using the command line to mount an SSD device would look like this.

mount -t ext4 -o discard /dev/sdb1 /mnt

For the most part, trimming takes place without user intervention during periods when the drive is otherwise inactive. The process is called “garbage collection” and it makes unused space available for use again. It cannot hurt to perform trim as a regular maintenance task so the util-linux package which is part of every distribution provides systemd fstrim.service and fstrim.timer units to run once per week.
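
Whether the weekly timer is actually switched on varies by distribution, so it is worth checking. A minimal sketch, assuming a systemd host; the `trim_timer_status` function name is hypothetical, while the `systemctl` subcommands it uses are standard.

```shell
# Report whether the weekly trim timer is enabled and, if not, how to enable it.
trim_timer_status() {
    # is-enabled exits non-zero for "disabled", so do not treat that as an error.
    state=$(systemctl is-enabled fstrim.timer 2>/dev/null) || true
    if [ "$state" = "enabled" ]; then
        echo "fstrim.timer is enabled"
    else
        echo "fstrim.timer is ${state:-unknown}; enable with: systemctl enable --now fstrim.timer"
    fi
}

# Run the check only where systemd is actually present.
if command -v systemctl >/dev/null 2>&1; then
    trim_timer_status
fi
```

Once the timer is enabled, `systemctl list-timers fstrim.timer` shows when it last ran and when it is next due.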

The fstrim command can also be used to manually initiate a trim on one or more filesystems. After the first trim which may show large byte counts, you will probably see very low counts and even zero most of the time.

    <snip>
    [root@david ~]# fstrim -v /
    /: 9.7 GiB (10361012224 bytes) trimmed
    [root@david ~]# fstrim -v /usr
    /usr: 40.4 GiB (43378610176 bytes) trimmed
    <snip>
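
When several filesystems are trimmed in one session, the byte counts can be totaled straight from the verbose output. The helper below is a hypothetical sketch that assumes the "(N bytes) trimmed" wording seen in the session above.

```shell
# Sum the byte counts reported by `fstrim -v` (read from stdin).
total_trimmed_bytes() {
    sed -n 's/.*(\([0-9][0-9]*\) bytes) trimmed.*/\1/p' |
        awk '{ sum += $1 } END { printf "%.0f\n", sum }'
}

# The two result lines from the session above; on a real host, as root:
#   fstrim -av | total_trimmed_bytes
printf '%s\n' \
    '/: 9.7 GiB (10361012224 bytes) trimmed' \
    '/usr: 40.4 GiB (43378610176 bytes) trimmed' | total_trimmed_bytes
```

For the two filesystems shown this prints 53739622400. The `-a` option in the commented command tells fstrim to trim every mounted filesystem that supports it, which saves naming each one.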

I suggest running trim once on each SSD filesystem after installing and creating them. The Crucial web site has a good article with more information on trim and why it is needed.

Defragmenting an SSD is not required and could also contribute to a reduced lifespan. Defragmentation is irrelevant for SSDs because it is intended to speed access to files by making them contiguous. With SSDs access to all storage locations is equally fast. Besides, defragmentation is almost never necessary even with HDDs because most modern Linux filesystems, including the default (for most distros) EXT4 filesystem, implement data storage strategies that reduce fragmentation to a level that makes defragmenting a waste of time.

Migrating a swap partition

A few weeks after I performed the initial migration I decided to install another M.2 SSD in the second slot on my motherboard. I moved my home directory to the new SSD, turned off swap, deleted the old swap volume, and created a new 10GB swap volume on the original SSD because there was still plenty of space there.

Of course, I had to change the entry in /etc/fstab to reflect the new location for swap.

/dev/mapper/vg01-swap none swap discard,defaults 0 0
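
The swap migration steps can be sketched as a short script. This is not a transcript of the commands used on this system: the old volume name /dev/vg_old/swap is hypothetical, the old swap space is assumed to be an LVM logical volume, and the script only prints each command unless DRY_RUN=0 is set, because lvremove is destructive.

```shell
# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Replace an old swap volume with a new logical volume.
# Arguments: old swap device, target volume group, size, new LV name.
migrate_swap() {
    old_dev=$1 vg=$2 size=$3 lv=$4
    run swapoff "$old_dev"                   # stop using the old swap space
    run lvremove -y "$old_dev"               # delete the old swap volume
    run lvcreate -L "$size" -n "$lv" "$vg"   # create the new volume
    run mkswap "/dev/$vg/$lv"                # write the swap signature
    run swapon "/dev/$vg/$lv"                # activate the new swap space
}

# /dev/vg_old/swap is a hypothetical name for the old volume.
migrate_swap /dev/vg_old/swap vg01 10G swap
```

LVM also exposes /dev/vg01/swap as /dev/mapper/vg01-swap, which is the name used in the fstab entry above.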

I turned swap back on and all was good – until I rebooted. The startup sequence – when systemd takes over – locked up at about 2.6 seconds after starting. A bit of investigation showed that the /etc/default/grub local configuration file still contained a reference to the old swap location in the Linux kernel option line.

I changed that line to the following, rebooted and all was well.

GRUB_CMDLINE_LINUX="resume=/dev/mapper/vg01-swap rd.lvm.lv=vg01/root rd.lvm.lv=vg01/swap rd.lvm.lv=vg01/usr"
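
A stale resume= entry like this is easy to spot before rebooting by pulling the device out of the kernel command line and comparing it with the active swap devices. A minimal sketch; the `resume_device` helper is a hypothetical name.

```shell
# Extract the resume= device from a kernel command line read on stdin.
resume_device() {
    tr ' "' '\n\n' | sed -n 's/^resume=//p' | head -n 1
}

# The line from the GRUB configuration shown above.
echo 'GRUB_CMDLINE_LINUX="resume=/dev/mapper/vg01-swap rd.lvm.lv=vg01/root rd.lvm.lv=vg01/swap rd.lvm.lv=vg01/usr"' | resume_device
```

This prints /dev/mapper/vg01-swap, which can be checked against the output of `swapon --show`. On a running system, `resume_device < /proc/cmdline` reports what the kernel was actually booted with. Note that edits to the GRUB defaults file normally take effect only after the GRUB configuration is regenerated, for example with `grub2-mkconfig -o /boot/grub2/grub.cfg` on Fedora; the output path differs between releases and firmware types, so treat that path as an assumption.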

Final thoughts

I am enjoying the significantly faster startup and program load times that have resulted from moving to an SSD for my system. Using SSD devices requires a bit of thought and planning as well as some ongoing maintenance but the benefits are well worth the effort.

Use of an SSD for the Linux operating system filesystems will reduce the time it takes for a host to boot, start up, and load programs, but it cannot do so for BIOS startup and initialization. BIOS is stored on a chip on the motherboard and is not affected by the use of an SSD.

Additional resources

Linux Journal, Data in a Flash, Part I: the Evolution of Disk Storage and an Introduction to NVMe, https://www.linuxjournal.com/content/data-flash-part-i-evolution-disk-storage-and-introduction-nvme