Last Updated on 04/02/2018 by dboth

This article was first published at Opensource.com as An introduction to Linux’s EXT4 filesystem.

In previous articles about Linux filesystems, I wrote about An introduction to Linux filesystems, and some of the higher-level concepts such as Everything is a file. I want to go into more detail about the specifics of the EXT filesystems but first, let’s answer the question, “What is a filesystem?” A filesystem is all of the following.

- Data Storage – A structured place to store and retrieve data; this is the primary function of any filesystem.

- Namespace – A naming and organizational methodology that provides rules for naming and structuring data.

- Security model – A scheme for defining access rights.

- API – System function calls to manipulate filesystem objects like directories and files.

- Implementation – The software to implement the above.

This article concentrates on the first item in the list and explores the metadata structures that provide the logical framework for data storage in an EXT filesystem.

EXT Filesystem history

Although written for Linux, the EXT filesystem has its roots in the Minix operating system and the Minix filesystem which predate Linux by about five years, having been first released in 1987. Understanding the EXT4 filesystem is much easier if we look at the history and technical evolution of the EXT filesystem family from its Minix roots.

Minix

When writing the original Linux kernel, Linus Torvalds needed a filesystem and did not want to write one at that point. So he simply included the Minix filesystem which had been written by Andrew S. Tanenbaum and which was a part of Tanenbaum’s Minix operating system. Minix was a Unix-like operating system written for educational purposes. Its code was freely available and was appropriately licensed to allow Torvald’s inclusion of it in his first version of Linux.

Minix has the following structures most of which are located in the partition in which the filesystem is generated.

- A boot sector in the first sector of the hard drive on which it is installed. The boot block includes a very small boot record and a partition table.

- The first block in each partition is a superblock which contains the metadata that defines the other filesystem structures and locates them on the physical disk assigned to the partition.

- An iNode bitmap block that is used to determine which iNodes are used and which are free.

- The iNodes which have their own space on the disk. Each iNode contains information about one file, including the locations of the data blocks, i.e., zones belonging to the file.

- A zone bitmap to keep track of the used and free data zones.

- The Data zone in which the data is actually stored.

For both types of bitmaps, one bit represents one specific data zone or one specific iNode. If the bit is zero the zone or iNode is free and available for use while if the it is one, the data zone or iNode is in use.

What is an iNode? Short for index-node, an iNode is a one 256 Byte block on the disk that stores data about the file. This includes the size of the file, the user IDs of the user and group owners of the file, the file mode, i.e., the access permissions, three timestamps specifying the time and date that the file was last accessed and modified and that the data in the iNode itself was last modified.

The iNode also contains data that points to the location of the file’s data on the hard drive. In Minix and the EXT1-3 filesystems, this is in the form of a list of data zones or blocks. The Minix filesystem iNodes supported 9 data blocks, seven direct and two indirect. There is an excellent PDF with a detailed description of the Minix filesystem structure here and a quick look at the iNode pointer structure here.

EXT

The original EXT filesystem (Extended) was written by Rémy Card and released with Linux in 1992 in order to to overcome some size limitations of the Minix filesystem. The primary structural changes were to the metadata of the filesystem which was based on the Unix filesystem, UFS, which is also known as the Berkeley Fast File System or FFS. I found very little published information about the EXT filesystem that can be verified, apparently because it had significant problems and was quickly superseded by the EXT2 filesystem.

EXT2

The EXT2 filesystem was quite successful and was used in Linux distributions for many years. It was the first filesystem I encountered when I started using Red Hat Linux 5.0 back in about 1997. The EXT2 filesystem has essentially the same metadata structures as the EXT filesystem, however EXT2 is more forward-looking in that much disk space is left between the metadata structures for future use.

Like Minix, EXT2 has a boot sector in the first sector of the hard drive on which it is installed which includes a very small boot record and a partition table. Then there is some reserved space after the boot sector which spans the space between the boot record and the first partition on the hard drive which is usually on the next cylinder boundary. GRUB2 – and possibly GRUB1 – uses this space for part of its boot code.

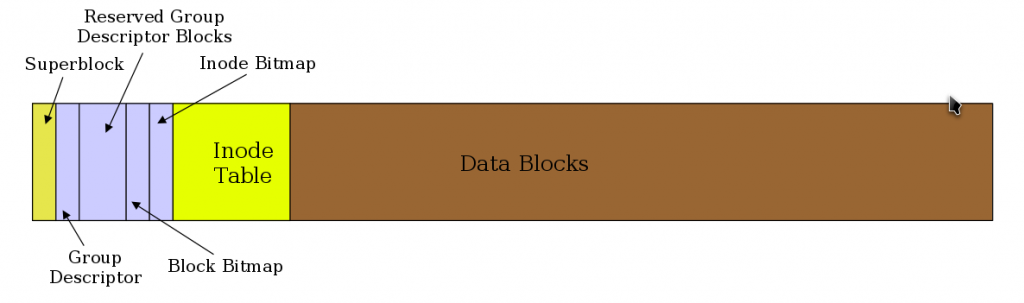

The space in each EXT2 partition is divided into cylinder groups that allow for more granular management of the data space. In my experience the group size usually amounts to about 8 MB. Figure 1, below, shows the basic structure of a cylinder group. The data allocation unit in a cylinder is the block, which is usually 4K in size.

Figure 1: The structure of a cylinder group in the EXT filesystems.

The first block in the cylinder group is a superblock which contains the metadata that defines the other filesystem structures and locates them on the physical disk. Some of the additional groups in the partition will have backup superblocks, but not all. A damaged superblock can be replaced by using a disk utility such as dd to copy the contents of a backup superblock to the primary superblock. It does not happen often, but I have experienced a damaged superblock once many years ago but I was able to restore its contents using that of one of the backup superblocks. Fortunately I had been foresighted and used the dumpe2fs command to dump the descriptor information of the partitions on my system.

# dumpe2fs /dev/sda1

|

Listing 1: The partial output from the dumpe2fs command. It shows the metadata contained in the superblock as well as data about each of the first three cylinder groups in the filesystem.

Each cylinder group has its own iNode bitmap that is used to determine which iNodes are used and which are free within that group. The iNodes have their own space in each group. Each iNode contains information about one file, including the locations of the data blocks belonging to the file. The block bitmap keeps track of the used and free data blocks within the filesystem. Notice that there is a great deal of data about the filesystem in the output shown in Listing 1. On very large filesystems the group data can run to hundreds of pages in length. The group metadata includes a listing of all of the free data blocks in the group.

The EXT filesystem implemented data allocation strategies that ensured minimal file fragmentation. Reducing fragmentation improved filesystem performance. Those strategies are described in the section on EXT4.

The biggest problem with the EXT2 filesystem, which I encountered on some occasions, was that it could take many hours to recover after a crash because the fsck (file system check) program took a very long time to locate and correct any inconsistencies in the filesystem. It once took over 28 hours on one of my computers to fully recover a disk upon reboot after a crash – and that was when disk were measured in the low hundreds of megabytes in size.

EXT3

The EXT3 filesystem had the singular objective of overcoming the massive amounts of time that the fsck program required to fully recover a disk structure damaged by an improper shutdown that occurred during a file update operation. The only addition to the EXT filesystem was the journal which records in advance the changes that will be performed to the filesystem. The rest of the disk structure is the same as for EXT2.

Instead of writing data to the disk data areas directly as in previous versions, the journal in EXT3 provides for writing of file data to a specified area on the disk along with its metadata. Once the data is safely on the hard drive, it can be merged in or appended to the target file with almost zero chance of losing data. As this data is committed to the data area of the disk, the journal is updated so that the filesystem will still be in a consistent state in the event of a system failure before all of the data in the journal is committed. On the next boot, the filesystem will be checked for inconsistencies and data remaining in the journal will then be committed to the data areas of the disk to complete the updates to the target file.

Journaling does reduce data write performance however there are three options available for the journal that allow the user to choose between performance and data integrity and safety. My personal preference is on the side of safety because my environments do not require heavy disk write activity.

The journaling function reduces the time required to check the hard drive for inconsistencies after a failure from hours or even days to mere minutes at the most. I have had many issues over the years that havonsistencies after a failure from hours or even days to mere minutes at the most. I have had many issues over the years that have crashed my systems. The details could fill another article, but suffice it to say that most were self-inflicted like kicking out a power plug. Fortunately the EXT journaling filesystems have reduced that bootup recovery time to two or three minutes. In addition, I have never had a problem with lost data since I started using EXT3 with journaling.

The journaling feature of EXT3 may be turned off and it then functions as an EXT2 filesystem. The journal itself still exists, empty and unused. Simply remount the partition with the mount command using the type parameter to specify EXT2. You may be able to do this from the command line, depending upon which filesystem you are working with, but you can change the type specifier in the /etc/fstab file and then reboot. I strongly recommend against mounting an EXT3 filesystem as EXT2 because of the additional potential for lost data and extended recovery times.

An existing EXT2 filesystem can be upgraded to EXT3 with the addition of a journal using the following command.

tune2fs -j /dev/sda1

Where /dev/sda1 is the drive and partition identifier. Be sure to change the file type specifier in /etc/fstab and remount the partition or reboot the system to have the change take effect.

EXT4

The EXT4 filesystem objectives are primarily improving performance, reliability, and capacity. To improve reliability metatada and journal checksums were added. The underlying structures of the EXT3 filesystem is essentially unchanged. To meet various mission critical requirements, the filesystem timestamps were improved with the addition of intervals down to nanoseconds. The addition of two high order bits in the timestamp field defers the 2038 problem until the year 2446 – for EXT4 filesystems, at least.

Data allocation was changed from fixed blocks to extents. An extent is described by its starting and ending place on the hard drive. This makes it possible to describe very long physically contiguous files in a single iNode pointer entry which can significantly reduce the number of pointers required to describe the location of all the data in larger files. Other allocation strategies have been implemented in EXT4 to further reduce fragmentation.

EXT4 reduces fragmentation by scattering newly created files across the disk so that they are not bunched up in one location at the beginning of the disk as many early PC filesystems did. The file allocation algorithms attempt to spread the files as evenly as possible among the cylinder groups, and when fragmentation is necessary, to keep the discontinuous file extents close to the others belonging to the same file to minimize head seek and rotational latency as much as possible. Additional strategies are used to preallocate extra disk space when a new file is created or when an existing file is extended. This helps to ensure that extending the file will not automatically result in its becoming fragmented. New files are never allocated immediately following the end of existing files which also prevents fragmentation of the existing files.

Aside from the actual location of the data on the disk, EXT4 uses functional strategies such as delayed allocation to allow the filesystem to collect all of the data being written to the disk before allocating the space to it. This can improve the likelihood that the data space will be contiguous.

Older EXT filesystems such as EXT2 and EXT3 can be mounted as EXT4 thus experiencing some minor performance gains. Unfortunately this requires turning off some of the important new features of EXT4 so I recommend against this.

EXT4 has been the default filesystem for Fedora since Fedora 14. An EXT3 filesystem can be upgraded to EXT4 using the procedure described in the Fedora documentation, however the performance will still suffer due to residual EXT3 metadata structures. The best method for upgrading to EXT4 from EXT3 is to back up all of the data on the target filesystem partition, use the mkfs command to write an empty EXT4 filesystem to the partition, and then restore all of the data from the backup.

iNode

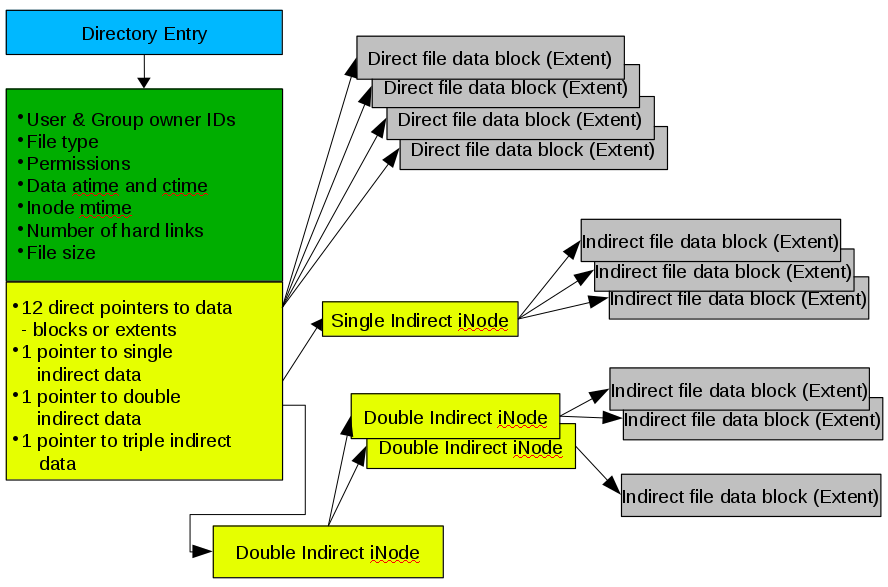

The iNode has been mentioned previously and is a key component of the metadata in the EXT filesystems. Figure 2 shows the relationship between the iNode and the data stored on the hard drive. This diagram is the directory and iNode for a single file which, in this case, may be highly fragmented. The EXT filesystems work actively to reduce fragmentation so it is very unlikely you will ever see a file with this may indirect data blocks or extents. In fact, as you will see below, fragmentation is extremely low in EXT filesystems so most iNodes will use only one or two direct data pointers and none of the indirect pointers.

Figure 2: The iNode stores information about each file and enables the EXT filesystem to locate all of the data belonging to it.

The iNode does not contain the name of the file. Access to a file is via the directory entry which itself is the name of the file and which contains a pointer to the iNode. The value of that pointer is the iNode number. Each iNode in a filesystem has a unique ID number, but iNodes in other filesystems on the same computer and even hard drive can have the same iNode number. This has implications for links that are best discussed in another article.

The iNode contains the metadata about the file including its type and permissions as well as its size. The iNode also contains space for 1the pointers provide direct access to the data extents and should be sufficient to handle most files. However, for files that have significant fragmentation it becomes necessary to have some additional capabilities in the form of indirect nodes. Technically these are not really iNodes, so I use the name node here for convenience.

An indirect node is a normal data block in the filesystem that us used only for describing data and not for storage of metadata. Thus more than 15 entries can be supported. For example a block size of 4K can support 512 4-byte indirect nodes thus allowing 12(Direct)+512(Indirect)=524 extents for a single file. Double and triple indirect node support is also supported but files requiring that many extents are unlikely to be encountered by most of us.

Data fragmentation

For many older PC filesystems such as FAT and all its variants, and NTFS, fragmentation has been a significant problem resulting in degraded disk performance. Defragmentation became an industry in itself with different brands of defragmentation software that ranged from very effective to only marginally so.

Linux’s Extended filesystems use data allocation strategies that help to minimize fragmentation of files on the hard drive and reduce the effects of fragmentation when it does occur.. You can use the fsck command on EXT filesystems to check the total filesystem fragmentation. The following example is to check the home directory of my main workstation which was only 1.5% fragmented. Be sure to use the -n parameter because it prevents fsck from taking any action on the scanned filesystem.

fsck -fn /dev/mapper/vg_01-home

I once performed some theoretical calculations to determine whether disk defragmentation might result in any noticeable performance improvement. While I did make some assumptions, the disk performance data I used were from a then new 300GB, Western Digital hard drive with a 2.0ms track to track seek time. The number of files in this example was the actual number that existed in the filesystem on the day I did the calculation. I did assume that a fairly large amount of the fragmented files would be touched each day, 20%.

Total files |

271,794 |

% Fragmentation |

5.00% |

Discontinuities |

13,590 |

% fragmented files touched per day |

20% (Assume) |

Number of additional seeks |

2,718 |

Average seek time |

10.90ms |

Total additional seek time per day |

29.63Sec |

0.49Min |

|

Track to Track seek time |

2.00ms |

Total additional seek time per day |

5.44Sec |

.091Min |

Table 1: The theoretical effects of fragmentation on disk performance.

I have done two calculations for the total additional seek time per day, one based on the track to track seek time, which is the more likely scenario for most files due to the EXT file allocation strategies, and one for the average seek time which I assumed would make a fair worst case scenario.

You can see from Table 1 that the impact of fragmentation on a modern EXT filesystem with a hard drive of even modest performance would be minimal and negligible for the vast majority of applications. You can plug the numbers from your environment into your own similar spreadsheet to see what you might expect in the way of performance impact. This type of calculation most likely will not represent actual performance but it can provide a bit of insight into fragmentation and its theoretical impact on a system.

Most of my partitions are around 1.5% or 1.6% fragmented; I do have one that is 3.3% fragmented but that is a large 128GB filesystem with fewer than 100 very large iso image files that I have had to expand several times over the years as it got too full.

That is not to say that some application environments don’t require greater assurance of even less fragmentation. The EXT filesystem can be tuned with care by a knowledgeable admin who can adjust the parameters to compensate for specific workload types. This can be done when the filesystem is created or later using the tune2fs command. The results of each tuning change should be tested,meticulously recorded, and analyzed to ensure optimum performance for the target environment. In the worst case where performance cannot be improved to desired levels, other filesystem types are available that may be more suitable for a particular workload. And remember that it is common to mix filesystem types on a single host system to match the load placed on each filesystem.

Due to the low amount of fragmentation on most EXT filesystems, it is not necessary to defragment. In any event there is no safe defragmentation tool for EXT filesystems. There are a few tools that allow you to check the fragmentation of an individual file, or the fragmentation of the remaining free space in a filesystem. There is one tool, e4defrag, which will defragment a file, directory or filesystem as much as the remaining free space will allow. As its name implies, it only works on files in an EXT4 filesystem and it does have some limitations.

If it does become necessary to perform a complete defragmentation on an EXT filesystem there is only one method that will work reliably. You must move all of the files from the filesystem to be defragmented, ensuring that they are deleted after being safely copied to another location. If possible, you could then increase the size of the filesystem to help reduce future fragmentation. Then copy the files back onto the target filesystem. Even this does not guarantee that all of the files will be completely defragmented.

Conclusions

The EXT filesystems have been the default for many Linux distributions for over 20 years. They offer stability, high capacity, reliability, and performance, while requiring minimal maintenance. I have tried other filesystems but always return to EXT. All of the places at which I have worked with Linux have used the EXT filesystems and found them suitable for all of the mainstream loads used on them. Without a doubt, the EXT4 filesystem should be used for most Linux systems unless there is a compelling reason to use another filesystem.